Server Replication

Vinchin provides full-machine server replication. Once a replication job starts, changes captured on the production host are sent to the backup server in real time, keeping the disks of the backup machine consistent with the production disks of the host. If the host fails, data and applications can be switched to the backup machine, manually or automatically, to ensure business continuity.

Before setting up replication, install the agent on both the primary and backup servers. See Agents for the installation steps.

Create Server Replication Job

To create a server replication job, go to the Replication > Server page. Creating a server replication job takes 4 steps.

Step 1: Replication source

Select the backup source host and choose one or more disks under the host.

You can also select the applications on the server that the job should monitor. Hosts can be searched by hostname or keyword.

Check association: When enabled, selecting a disk also selects its associated disks (for example, the other disks in the same RAID group or logical volume group).
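The association check can be thought of as expanding the selection to every disk that shares a group with an already selected disk. The sketch below assumes a hypothetical `disk_groups` mapping from a group name to its member disks; the names `expand_selection`, `md0`, and `vg_data` are illustrative only and are not part of Vinchin.

```python
def expand_selection(selected, disk_groups):
    """Return the selected disks plus every disk that shares a group with them.

    disk_groups (hypothetical) maps a group name, such as a RAID group or a
    logical volume group, to the disks it contains. Groups are followed
    transitively: if a newly added disk belongs to another group, that
    group's disks are added too.
    """
    result = set(selected)
    changed = True
    while changed:  # repeat until no group adds a new disk
        changed = False
        for members in disk_groups.values():
            members = set(members)
            if result & members and not members <= result:
                result |= members
                changed = True
    return sorted(result)


groups = {"md0": ["sdb", "sdc"], "vg_data": ["sdc", "sdd"]}
print(expand_selection(["sdb"], groups))  # ['sdb', 'sdc', 'sdd']
```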

Step 2: Replication destination

Target Storage: By default, the storage device with the most available space across all nodes is selected. You can choose a different storage device to keep backup data in a specific location.

Target Node: If the selected storage belongs to a node, that node is associated automatically.
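The default target selection described above can be sketched as "pick the storage with the most free space, then take its node". This is a minimal illustration, assuming a hypothetical `Storage` record with a free-space figure and an optional owning node; it is not Vinchin's actual selection logic.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Storage:
    name: str
    free_bytes: int
    node: Optional[str] = None  # node the storage is attached to, if any


def pick_default_storage(storages: List[Storage]) -> Storage:
    """Default choice: the storage with the most free space across all nodes."""
    if not storages:
        raise ValueError("no storage available")
    return max(storages, key=lambda s: s.free_bytes)


def target_node(storage: Storage) -> Optional[str]:
    """The target node follows the chosen storage when it has one."""
    return storage.node


pool = [Storage("local-a", 200 * 2**30, "node-a"), Storage("nas-b", 900 * 2**30, "node-b")]
chosen = pick_default_storage(pool)
print(chosen.name, target_node(chosen))  # nas-b node-b
```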

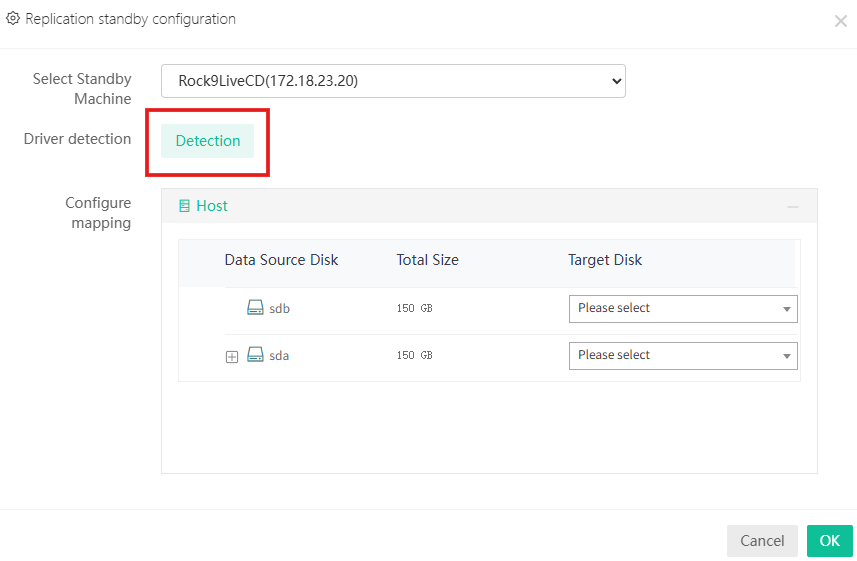

Replication standby configuration: Click the Configure Standby Mapping button to configure the standby machine.

The backup machine does not need an operating system or applications installed; it can be booted directly from the LiveCD/WinCE image. However, the capacity of each configured disk must be ≥ the capacity of the corresponding disk on the source host.

See Boot Image for details on downloading the boot image.
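The capacity rule for the standby mapping can be checked before submitting a job. The sketch below assumes a hypothetical mapping from source disk to standby disk, with capacities in GiB; the function name and disk names are illustrative.

```python
def check_standby_mapping(mapping, source_gib, standby_gib):
    """Return a list of problems; an empty list means the mapping is valid.

    mapping:      source disk name -> standby disk name
    source_gib:   source disk name -> capacity in GiB
    standby_gib:  standby disk name -> capacity in GiB
    """
    problems = []
    for src, dst in mapping.items():
        if dst not in standby_gib:
            problems.append(f"{src}: standby disk {dst} not found")
        elif standby_gib[dst] < source_gib[src]:
            problems.append(
                f"{src} -> {dst}: standby disk is {standby_gib[dst]} GiB, "
                f"needs at least {source_gib[src]} GiB"
            )
    return problems


issues = check_standby_mapping(
    {"sda": "vda", "sdb": "vdb"},
    {"sda": 100, "sdb": 500},
    {"vda": 100, "vdb": 400},
)
print(issues)  # ['sdb -> vdb: standby disk is 400 GiB, needs at least 500 GiB']
```

An equal capacity passes, since the requirement is "greater than or equal to".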

Driver detection: Click Driver Detection to check whether the backup machine is a heterogeneous platform and whether it is missing any drivers.

Step 3: Replication policy

General Strategy

There are two kinds of Throttling Policy: customized and global. A customized policy applies only to the current job and can set a start time, end time, and speed limit for each day, week, or month; for a permanent speed limit, only the speed limit needs to be set. A global policy is configured under Resource > Backup Resource > Throttling Policy and can be shared by multiple jobs. For details, see Throttling Policy.
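A customized throttling policy amounts to "which speed limit is in effect right now". The sketch below models only daily time windows with an optional weekday filter (monthly rules are omitted) and treats a rule with no start or end time as a permanent limit. The `ThrottleRule` type, the Mbps unit, and the choice that the lowest limit wins when rules overlap are all assumptions for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, time
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class ThrottleRule:
    limit_mbps: float
    start: Optional[time] = None  # start and end both None: permanent limit
    end: Optional[time] = None
    weekdays: Optional[FrozenSet[int]] = None  # 0 = Monday ... 6 = Sunday; None = every day

    def applies(self, now: datetime) -> bool:
        if self.weekdays is not None and now.weekday() not in self.weekdays:
            return False
        if self.start is None or self.end is None:
            return True  # permanent rule: always in effect
        t = now.time()
        if self.start <= self.end:
            return self.start <= t < self.end
        return t >= self.start or t < self.end  # window crosses midnight, e.g. 22:00-06:00


def current_limit(rules: List[ThrottleRule], now: datetime) -> Optional[float]:
    """Return the lowest limit among the rules in effect, or None if unthrottled."""
    limits = [r.limit_mbps for r in rules if r.applies(now)]
    return min(limits) if limits else None


office_hours = ThrottleRule(50, time(9), time(18), frozenset(range(5)))
print(current_limit([office_hours], datetime(2024, 1, 1, 10, 0)))  # 50 (a Monday)
print(current_limit([office_hours], datetime(2024, 1, 6, 10, 0)))  # None (a Saturday)
```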

Transmission Strategy

Encrypted Transfer: Encrypts the channel over which backup data is transmitted. This feature is disabled by default.

Source-side compression: Compresses data before transmission to reduce network bandwidth usage and transfer time.
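Source-side compression trades some CPU on the source host for less data on the wire. The sketch below uses Python's standard `zlib` module purely to illustrate the idea; the compression algorithm and level Vinchin actually uses are not stated here, and `prepare_block`/`receive_block` are hypothetical names.

```python
import zlib


def prepare_block(data: bytes, compress: bool = True) -> bytes:
    """Source side: optionally compress a block before it is sent."""
    return zlib.compress(data, 6) if compress else data


def receive_block(payload: bytes, compressed: bool = True) -> bytes:
    """Receiving side: restore the original block."""
    return zlib.decompress(payload) if compressed else payload


block = b"log line repeated\n" * 4096  # highly repetitive, so it compresses well
payload = prepare_block(block)
print(len(block), len(payload))
assert receive_block(payload) == block
```

Repetitive data such as logs shrinks a lot; data that is already compressed or encrypted gains little, so the bandwidth saving depends on the workload.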

Failover

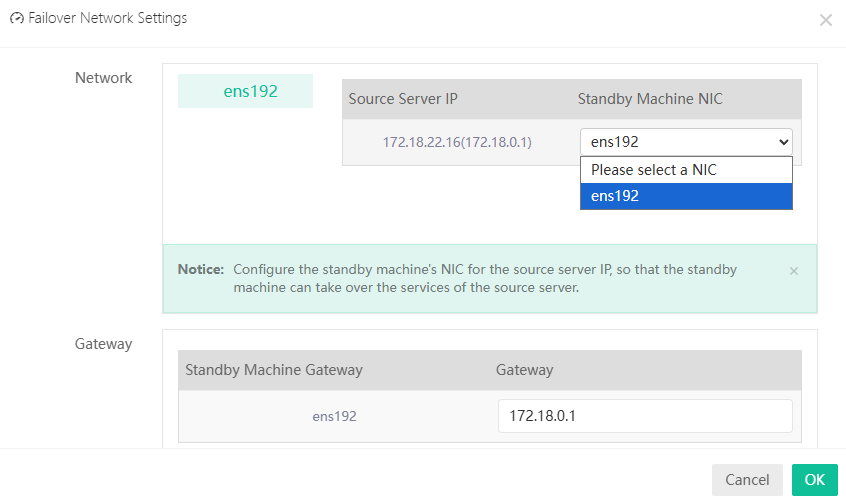

When failover is enabled and the production system protected by the job fails or can no longer provide services, the backup machine takes over and continues running and providing services. Click Configure failover network to set up the business IP and backup gateway configuration.

Application Failover:

When the selected host triggers failover, the configured applications are taken over as well.

This feature is turned off by default.

Automatic takeover:

- When automatic takeover is enabled and the production host or an application meets a takeover condition, data, applications, and IP addresses are switched to the backup machine automatically, bringing services back up quickly to ensure business continuity.

- Heartbeat failure time: Automatic takeover is triggered when the heartbeat has been failing continuously for longer than this value. The default is 30 seconds.

- If application failover is enabled, application monitoring can also be configured here. When a monitored application meets the configured condition, application failover starts immediately. You can set the number of consecutive faults (continuous fault frequency) and the fault monitoring interval.
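The two takeover triggers above can be sketched as small monitors: one measures how long heartbeats have been failing without interruption, the other counts consecutive failed application checks. This is an illustrative model, not Vinchin's implementation; the class names are hypothetical, and the `max_faults` default of 3 is an assumed value (only the 30-second heartbeat default comes from this page).

```python
from typing import Optional


class HeartbeatMonitor:
    """Signal takeover once heartbeats have failed continuously for longer
    than failure_time seconds (30 by default, as in the job settings)."""

    def __init__(self, failure_time: float = 30.0) -> None:
        self.failure_time = failure_time
        self.first_failure: Optional[float] = None  # start of the current failure run

    def record(self, ok: bool, now: float) -> bool:
        """Record one heartbeat result at time `now` (seconds); True means take over."""
        if ok:
            self.first_failure = None  # any successful heartbeat resets the run
            return False
        if self.first_failure is None:
            self.first_failure = now
        return now - self.first_failure > self.failure_time


class AppMonitor:
    """Signal application failover after max_faults consecutive failed checks.
    Checks are assumed to run once per fault monitoring interval."""

    def __init__(self, max_faults: int = 3) -> None:  # 3 is a hypothetical default
        self.max_faults = max_faults
        self.faults = 0

    def record(self, healthy: bool) -> bool:
        self.faults = 0 if healthy else self.faults + 1
        return self.faults >= self.max_faults


hb = HeartbeatMonitor()
for t in range(0, 40, 5):  # one failed heartbeat every 5 seconds
    if hb.record(False, float(t)):
        print(f"takeover at t={t}s")  # takeover at t=35s
        break
```

Because the condition is "greater than" the configured time, a run of failures lasting exactly 30 seconds does not yet trigger takeover in this model.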

Script configuration: Scripts can be set to run at the following points:

- Before Backup

- After Backup

- Before Takeover

- After Takeover

- Takeover Monitoring
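The trigger points above behave like lifecycle hooks: scripts registered for a stage run when the job reaches that stage. The sketch below is a hypothetical model that uses Python callables in place of the user's scripts; the `Stage` and `ScriptHooks` names are illustrative and do not describe how the agent actually executes scripts.

```python
from enum import Enum, auto
from typing import Callable, Dict, List


class Stage(Enum):
    BEFORE_BACKUP = auto()
    AFTER_BACKUP = auto()
    BEFORE_TAKEOVER = auto()
    AFTER_TAKEOVER = auto()
    TAKEOVER_MONITORING = auto()


class ScriptHooks:
    def __init__(self) -> None:
        self._hooks: Dict[Stage, List[Callable[[], None]]] = {s: [] for s in Stage}

    def register(self, stage: Stage, script: Callable[[], None]) -> None:
        self._hooks[stage].append(script)

    def fire(self, stage: Stage) -> int:
        """Run every script registered for this stage, in order; return how many ran."""
        for script in self._hooks[stage]:
            script()
        return len(self._hooks[stage])


log = []
hooks = ScriptHooks()
hooks.register(Stage.BEFORE_TAKEOVER, lambda: log.append("stop old services"))
hooks.register(Stage.AFTER_TAKEOVER, lambda: log.append("notify ops"))
hooks.fire(Stage.BEFORE_TAKEOVER)
hooks.fire(Stage.AFTER_TAKEOVER)
print(log)  # ['stop old services', 'notify ops']
```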

Advanced Strategy

Advanced strategy includes Common Configuration, Cache Configuration, Resource Monitoring, and Overload Protection.

Common configuration:

(1) Storage data block size: Sets the size of a single data block used to store backup data in the backup system and on the standby machine. The default is 64KB.

(2) IO replication mode: Asynchronous or synchronous; the default is asynchronous. Asynchronous mode uses more memory than synchronous mode but has lower IO latency, so choose asynchronous when plenty of memory is free and synchronous when free memory is limited. If memory runs short and cannot be allocated, asynchronous mode automatically switches to synchronous.

(3) Backup valid data: Backs up only valid data, ignoring invalid data blocks, to shorten backup time and improve resource utilization.

(4) Skip bad block backup: When enabled, blocks on bad disk sectors are skipped during backup. This feature is disabled by default.

(5) Resume from Breakpoint: When enabled, if the job is interrupted by a blocking failure (process crash, power outage and restart, full cache, etc.), the system attempts to resume the job automatically and verify the data. When disabled, such a failure stops the job. This feature is enabled by default.
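The IO replication mode trade-off can be illustrated with a generic write-replication model: in asynchronous mode writes are acknowledged immediately and queued in memory (lower latency, more memory), while in synchronous mode each write is delivered before it returns. The sketch assumes a fixed memory budget for the queue and falls back to synchronous mode when the budget is exhausted, mirroring the automatic switch described above. It is a hypothetical model, not Vinchin's replication engine; `Replicator` and `sent` (which stands in for the backup side) are illustrative names.

```python
from enum import Enum
from typing import List


class IOMode(Enum):
    ASYNC = "asynchronous"
    SYNC = "synchronous"


class Replicator:
    """Generic async/sync write replication with automatic fallback to sync."""

    def __init__(self, memory_budget: int, mode: IOMode = IOMode.ASYNC) -> None:
        self.mode = mode
        self.memory_budget = memory_budget  # bytes allowed for queued writes
        self.queued: List[bytes] = []       # async: acknowledged but not yet sent
        self.queued_bytes = 0
        self.sent: List[bytes] = []         # stands in for data delivered to the backup side

    def write(self, block: bytes) -> None:
        if self.mode is IOMode.ASYNC:
            if self.queued_bytes + len(block) <= self.memory_budget:
                self.queued.append(block)   # acknowledge now, send later
                self.queued_bytes += len(block)
                return
            # Memory exhausted: drain the queue in order, then switch to sync.
            self.flush()
            self.mode = IOMode.SYNC
        self.sent.append(block)             # sync: delivered before the write returns

    def flush(self) -> None:
        self.sent.extend(self.queued)
        self.queued.clear()
        self.queued_bytes = 0


BLOCK = 64 * 1024  # 64KB, the default storage data block size
r = Replicator(memory_budget=2 * BLOCK)
for i in range(3):
    r.write(bytes([i]) * BLOCK)
print(r.mode, len(r.sent))  # IOMode.SYNC 3
```

Flushing the queue before the first synchronous write keeps blocks in their original order on the backup side.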

Cache configuration:

(1) Agent file cache path: The directory where cached data is stored. A system default path is used.

(2) Agent file cache size: Configure a larger file cache when the host generates a large amount of real-time data, and a smaller one when it generates little. If the file cache size is exceeded, the job stops. The default is 8GB.

(3) Memory cache: When enabled, memory is used as the cache first to improve IO efficiency. This feature is enabled by default.

(4) Agent memory cache size: Sets the memory cache size, chosen according to the host's memory. The default is 512MB.
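The cache settings describe two tiers: pending real-time data goes to the memory cache first (when enabled), spills to the file cache, and the job stops if the file cache would be exceeded. The accounting-only sketch below uses the default sizes from this page; it does not model data draining as it is sent, and `AgentCache` is a hypothetical name.

```python
MiB = 1024 ** 2
GiB = 1024 ** 3


class AgentCache:
    """Accounting-only model of where pending real-time data is cached."""

    def __init__(self, memory_size: int = 512 * MiB, file_size: int = 8 * GiB,
                 use_memory: bool = True) -> None:
        self.memory_size = memory_size if use_memory else 0  # memory cache disabled -> 0
        self.file_size = file_size
        self.memory_used = 0
        self.file_used = 0
        self.stopped = False

    def add(self, nbytes: int) -> str:
        """Place nbytes of pending data; return 'memory', 'file' or 'stopped'."""
        if self.stopped:
            raise RuntimeError("job already stopped: file cache exceeded")
        if self.memory_used + nbytes <= self.memory_size:
            self.memory_used += nbytes
            return "memory"
        if self.file_used + nbytes <= self.file_size:
            self.file_used += nbytes
            return "file"
        self.stopped = True  # the file cache would overflow, so the job stops
        return "stopped"


cache = AgentCache()
print(cache.add(400 * MiB), cache.add(200 * MiB))  # memory file
```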

Resource monitoring:

(1) Stop job threshold: When remaining memory falls below this threshold, the job stops continuous data protection.

(2) Continuous data protection degradation: When remaining memory falls below the degradation threshold, the job pauses continuous data protection.

(3) Continuous data protection degradation threshold: When remaining memory drops to this threshold, the job is downgraded from continuous data protection to incremental backup.

(4) Restore continuous data protection monitoring interval: How often the system checks the client's memory usage while a job is degraded. Once enough memory is available again, the job is restored to continuous data protection.
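The resource monitoring settings combine into a small state machine driven by the client's free memory. The sketch below assumes the stop threshold is lower than the degradation threshold, treats setting (2) as an on/off switch, and assumes a stopped job is not resumed automatically; each loop iteration stands for one check at the restore monitoring interval. All names and the MB figures are hypothetical.

```python
from enum import Enum


class JobMode(Enum):
    CDP = "continuous data protection"
    INCREMENTAL = "incremental backup"
    STOPPED = "stopped"


def next_mode(mode: JobMode, free_mb: float, stop_threshold_mb: float,
              degrade_threshold_mb: float, degradation_enabled: bool = True) -> JobMode:
    """Decide the job's mode from the client's free memory at one check."""
    if mode is JobMode.STOPPED:
        return mode  # assumption: a stopped job is not resumed automatically
    if free_mb < stop_threshold_mb:
        return JobMode.STOPPED
    if mode is JobMode.CDP and degradation_enabled and free_mb < degrade_threshold_mb:
        return JobMode.INCREMENTAL  # pause CDP, fall back to incremental backup
    if mode is JobMode.INCREMENTAL and free_mb >= degrade_threshold_mb:
        return JobMode.CDP  # enough memory again: restore CDP
    return mode


mode = JobMode.CDP
for free in [2048, 700, 400, 900]:  # free memory seen at successive checks (MB)
    mode = next_mode(mode, free, stop_threshold_mb=256, degrade_threshold_mb=512)
    print(free, mode.name)  # prints CDP, CDP, INCREMENTAL, CDP in turn
```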

Overload protection: If resource limits are set on a backup node, jobs running on that node are subject to those limits by default. For higher-priority jobs, enable this setting to ignore the node's resource limits. This feature is disabled by default.

Step 4: Review & Confirm

After completing the settings above, you can review and confirm them on a single screen. Specify a job name to identify the server replication job, then click Submit to create the replication job.